Whitney Tilson’s email to investors discussing Tesla’s FSD software.


Tesla And Autonomy

My analyst Kevin DeCamp has been very bullish – and very right – on Tesla since he bought the stock in 2012 and a Model S in 2014 (both of which he still owns, along with a new Model Y – hence my nickname for him: “100-bagger”)!

He’s done a lot of research on autonomous vehicles and Tesla’s full self-driving, which he’s summarized in his report below. Here’s his conclusion:

Tesla has clearly taken a hard path to autonomy, but it’s a scalable one that lends itself to intermediate products along the way. Critics say Musk had no choice but to use only cheap sensors because he needs to sell affordable cars, but what if this approach gets to Level 5 autonomy first? How many lives will be saved? Crash data from Tesla’s Autopilot – which is standard on all of its cars – already show that it is saving lives, and it will only get better over time. Although Autopilot is controversial because its driver monitoring system is not camera-based, I can say with confidence that my own experience with it makes me a safer driver, and Sebastian Thrun, the founder of Google’s self-driving car project (now Waymo), feels the same way about his Tesla.

Tesla’s camera vision approach aligns perfectly with its first-principles DNA in that it is the simplest solution possible – if it works. As Musk has said many times, “the best part is no part” – if we can train computers through deep learning to see and perceive “as plain as day” with 360-degree superhuman vision, then the massive costs of Lidar and HD maps could be unnecessary.

Either way, AVs are guaranteed to be an enormous market – with estimates as high as $7-10 trillion annually. Apple has the largest market capitalization of any company in the world, yet the total global smartphone market is less than $1 trillion. There will be hundreds of billions invested in this sector over the coming years, and many ways to make money. Don’t miss Whitney Tilson’s TaaS (Transportation as a Service) video to learn how to take advantage of this massive trend.

Lastly, if you can gain enough conviction on Tesla’s camera vision approach – as I have – I think there is still substantial upside for the stock, even at a ~$570 billion market cap, because the world – for the reasons I laid out – is doubting Tesla and Musk once again. Stay tuned…

If you have questions or comments for him, please email him directly at [email protected]

Tesla’s FSD Software: Revolutionary or Vaporware?

As I wrote in my last essay a few months ago on Tesla’s future, 2021 is an extremely important year for the company’s autonomy goals and its full self-driving (FSD) software rollout. As usual, there is a lot of controversy surrounding Tesla in general – and the debate about FSD is particularly heated. On Twitter, opinions range from “CEO Elon Musk is a god and Tesla’s lead in autonomous vehicles (AVs) is unassailable” to “Musk is a fraud and the $10,000 FSD option is vaporware.” To add to the confusion, self-driving technology is incredibly complex and can’t be easily understood by watching a quick YouTube video (isn’t that how we all learn these days?).

I’d like to address this difficult subject by doing a deep dive into Tesla’s approach to AVs compared to the rest of the industry. Given that the market for AVs will be worth many trillions of dollars, I think the effort is worth it. In addition, in the process we will build a basic understanding of artificial intelligence (AI) – which will affect almost every industry and by one estimate will add as much as $13 trillion to global output by 2030.

Overview of Lidar/HD maps vs. Tesla’s computer vision approach

Lidar (“light detection and ranging”) determines distances by targeting an object with lasers and measuring the time for the reflected light to return to the receiver. Lidar generates photons in the infrared range of the electromagnetic spectrum, which is why it’s not visible to the human eye. Lidar sensors are extremely useful for AVs because they allow the car to construct a live high-resolution 3D map of its surrounding environment.
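The time-of-flight principle boils down to one line of arithmetic: the pulse travels to the object and back, so the distance is half of the speed of light times the elapsed time. A minimal Python sketch, assuming an idealized single pulse and ignoring the slight slowdown of light in air and any sensor-specific timing details:

```python
# Speed of light in a vacuum, in metres per second (the small slowdown in air is ignored).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def lidar_range_m(round_trip_time_s: float) -> float:
    """Distance to a target from the round-trip time of a laser pulse.

    The light covers the distance twice (out and back), so the one-way
    range is half of (speed of light x elapsed time).
    """
    if round_trip_time_s < 0:
        raise ValueError("round-trip time must be non-negative")
    return SPEED_OF_LIGHT_M_PER_S * round_trip_time_s / 2.0


# A return arriving 200 nanoseconds after the pulse left implies a target about 30 m away.
print(round(lidar_range_m(200e-9), 2))  # 29.98
```

Repeating this measurement for hundreds of thousands of pulses per second, each fired in a slightly different direction, is what builds up the 3D point cloud.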

HD (high-definition) maps are highly accurate 3D maps built specifically for AVs. They contain details not normally present in traditional maps – lane markings, street signs, traffic signals, etc. – with precision down to the centimeter level. AVs that use this technology, such as Alphabet’s (GOOGL) Waymo, rely on very powerful onboard computers to cross-check these HD maps against Lidar data, enabling extremely accurate spatial localization.
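Real AV stacks use far more elaborate scan matching and filtering, and the sketch below is not Waymo’s code. As a toy illustration of the “cross-check the map against Lidar” idea, it assumes the car has already matched a few landmarks seen by its Lidar (positions in the car’s own frame) to the same landmarks stored in an HD map, and then solves for the car’s position and heading with the classic least-squares rigid alignment (the Kabsch method). The function name and the landmark coordinates are hypothetical.

```python
import numpy as np


def estimate_pose_2d(map_pts: np.ndarray, scan_pts: np.ndarray):
    """Least-squares rigid transform (R, t) such that map_pts ~= scan_pts @ R.T + t.

    map_pts:  (N, 2) landmark positions in the HD map frame.
    scan_pts: (N, 2) the same landmarks as measured by the sensor, in the car frame.
    Row i of both arrays must refer to the same landmark (known correspondences).
    """
    map_c = map_pts.mean(axis=0)
    scan_c = scan_pts.mean(axis=0)
    # Cross-covariance of the centred point sets.
    H = (scan_pts - scan_c).T @ (map_pts - map_c)
    U, _, Vt = np.linalg.svd(H)
    # Flip the last axis if needed so R is a proper rotation, not a reflection.
    d = np.sign(np.linalg.det(Vt.T @ U.T))
    R = Vt.T @ np.diag([1.0, d]) @ U.T
    t = map_c - R @ scan_c            # the car's origin expressed in map coordinates
    heading_rad = np.arctan2(R[1, 0], R[0, 0])
    return R, t, heading_rad


# Hypothetical example: the car sits at (100, 50) in the map, heading 30 degrees.
theta = np.deg2rad(30.0)
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta),  np.cos(theta)]])
t_true = np.array([100.0, 50.0])
scan = np.array([[10.0, 2.0], [15.0, -3.0], [8.0, 7.0], [20.0, 1.0]])
landmarks_in_map = scan @ R_true.T + t_true   # what the HD map would store

R, t, heading = estimate_pose_2d(landmarks_in_map, scan)
print(t, np.rad2deg(heading))  # approximately [100. 50.] and 30.0
```

With noise-free data the pose is recovered exactly; with real, noisy measurements the same least-squares fit gives the best compromise across all matched landmarks.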

Lidar also aids object recognition: the context provided by its high-resolution 3D map makes it easier to recognize objects in camera images. Although most autonomy companies also use other sensors such as radar and cameras, the Lidar/HD map combination forms the technological backbone of most AV companies, including Waymo, Cruise, and Argo AI.

Tesla, however, has chosen to go a different route, avoiding both Lidar and HD maps. Its system, instead, focuses primarily on computer vision (CV) using cameras and AI. Tesla contends that extremely good computer vision is necessary for autonomy because of the way our roads are designed and, once it achieves this incredibly difficult objective, Lidar will be unnecessary and a waste of resources. Tesla also claims to have the ability – through a “pseudo-lidar” approach – to create sufficient 3D depth maps using only cameras and deep learning (a form of AI), which provides spatial localization and object recognition at a much lower cost than Lidar and HD maps. To quote Musk:

Lidar is a fool’s errand…..anyone relying on Lidar is doomed……doomed. Expensive sensors that are unnecessary. It’s like having a whole bunch of expensive appendices – like one appendix is bad, but now you want a whole bunch of them? That’s ridiculous……you’ll see.

This is music to most Tesla bulls’ ears, but what do AV industry experts think of his statements?

Industry experts on Tesla’s approach

To put it mildly, most of the industry is highly skeptical of Musk’s claims. Chris Urmson, co-founder and CEO of the self-driving technology company Aurora, recently said of Tesla’s technology: “It’s just not going to happen. It’s technically very impressive what they’ve done, but we were doing better in 2010.” (In 2010, Urmson was working on Google’s self-driving car project, which later became Waymo.)

Austin Russell, CEO of Luminar (LAZR) – a Lidar startup – comments:

Our 50 other commercial partners and seven of the top 10 largest auto makers in the world would probably disagree with Elon along with pretty much every expert in the industry….What Tesla has today, what they call full self-driving you don’t need lidar for. The problem is that it’s not full self-driving (laughs), it’s actually not self-driving at all. That’s where I think they have drawn huge criticism in the industry with this rogue branding approach to what is being delivered. Lidar gives you a true 3D understanding, not a 2D understanding. Instead of keeping your hands on the wheel and eyes on the road at all times, take your hands and eyes off, use your phone, read a book, watch a movie. Actually autonomous.

I could continue quoting others in the industry, but that sums up the general consensus from experts – or at least competitors with a completely different approach. But, what about Tesla’s actual progress on solving full self-driving?

Elon Musk’s promises

Musk has undoubtedly disappointed many investors and customers with his autonomy claims and missed timelines – giving plenty of ammo to Tesla bears and skeptics.

Here are a few:

- In 2016, Musk claimed that all Teslas being produced had all the hardware needed for Level 5 autonomy – a system capable of performing all driving tasks in all conditions – requiring only a software update, and he promised a coast-to-coast self-driving demo in 2017.

- In February 2019, Musk claimed in a podcast that by the end of 2020, Tesla’s autonomy would be so capable that drivers would be able to snooze in the car while it took them to their destination.

- In April 2019, during Tesla’s “Autonomy Day,” Musk said, “fast forward a year, maybe a year and three months – but next year for sure – we will have over a million robotaxis on the road.” He added that in “probably” two years (2021) Tesla would make a car with no steering wheel or pedals, and he projected that each of the company’s cars, used as a robotaxi, would have a net present value of $200,000 “conservatively,” because the car could potentially earn its owner $30,000 a year.
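Musk’s $200,000 figure is a net-present-value calculation: discount each year’s expected robotaxi earnings back to today and add them up. The full inputs behind his number aren’t given here, so the sketch below is only an illustration. Only the $30,000-a-year figure comes from the claim; the 11-year vehicle life and 7% discount rate are assumptions chosen to show how such an income stream can support a six-figure valuation.

```python
def npv_of_annuity(annual_cash_flow: float, years: int, discount_rate: float) -> float:
    """Present value of a constant cash flow received at the end of each year."""
    return sum(annual_cash_flow / (1.0 + discount_rate) ** year
               for year in range(1, years + 1))


# Hypothetical inputs: $30,000/year is from Musk's claim; the 11-year life
# and 7% discount rate are illustrative assumptions, not Tesla figures.
value = npv_of_annuity(30_000, years=11, discount_rate=0.07)
print(f"${value:,.0f}")
```

The result lands in the low $220,000s under these assumptions. Shortening the assumed service life or raising the discount rate lowers it considerably, which shows how much the headline number depends on inputs that were never spelled out.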

Fast forward to today and Tesla is obviously way behind Musk’s 2019 timeline – the last I checked, all Teslas still have steering wheels and pedals and my Model Y isn’t worth $200,000!

That said, Tesla has released an “FSD beta” to a relatively small number of Tesla customers (perhaps 1,000-2,000) that the company claims is “feature complete,” meaning that it can execute most routine drives with few or no interventions.

Are we collectively so jaded by Musk’s grandiose claims that we are ignoring a major step forward in AVs?

Current state of the industry

Musk certainly deserves criticism for the way he has handled expectations on autonomy, but let’s not forget the rest of the industry’s missed timelines. Most notably, Google co-founder Sergey Brin promised self-driving cars “for all” within five years… in 2012.

Waymo – formerly Google’s self-driving car project – is widely viewed as the leader in the space. It is the first company in the U.S. to run a truly commercial driverless service without safety backup drivers, which it has operated since October 2020, though only within a small geofenced (Level 4) area of approximately 50 square miles in Chandler, Arizona. This is impressive, but a far cry from Brin’s promise.
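A geofenced service simply refuses trips that leave an approved area. Waymo hasn’t described its implementation, so here is a minimal sketch of the idea, assuming the service area is stored as a simple polygon of (x, y) coordinates in a flat local frame (real systems use geographic coordinates and much richer map data). The classic even-odd ray-casting test decides whether a point lies inside; the names and the square area are hypothetical.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def inside_geofence(point: Point, polygon: List[Point]) -> bool:
    """Even-odd ray casting: count how many polygon edges a ray to the right crosses."""
    x, y = point
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        # Does this edge straddle the horizontal line through the point?
        if (y1 > y) != (y2 > y):
            # x-coordinate where the edge crosses that horizontal line.
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


# Hypothetical square service area of 7 x 7 miles (about 50 square miles).
service_area = [(0.0, 0.0), (7.0, 0.0), (7.0, 7.0), (0.0, 7.0)]
print(inside_geofence((3.0, 4.0), service_area))  # True: trip can start
print(inside_geofence((9.0, 4.0), service_area))  # False: outside the geofence
```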

Waymo hasn’t announced when it will expand to other cities, and CTO Dmitri Dolgov was very tight-lipped about the strategy and speed of the rollout in a recent interview. Dolgov recently became co-CEO (with Tekedra Mawakana) when the former CEO, John Krafcik, stepped down unexpectedly. In addition, Waymo’s long-time chief financial officer and its head of automotive partnerships and corporate development are departing this month. Does this sound like a company on the verge of dominating a multi-trillion-dollar industry?

This recent podcast with a few leaders in the AV industry also leads me to believe that large-scale AV deployments using Lidar/HD maps will not happen in the near future. This would explain why there has been a consolidation in the industry to absorb the massive costs associated with getting to the starting line and beginning to generate revenues.

When you take into consideration Musk’s missed timelines and the AV industry’s glacial progress, it’s not a stretch to conclude that self-driving cars are one of the greatest technical challenges of all time. One of the biggest difficulties is that Lidar and HD mapping are both very expensive, which makes this approach difficult to scale. Waymo ordered “up to” 80,000 vehicles in 2018, but has only taken delivery of around 600. Tesla has over one million vehicles on the road and adds the equivalent of more than three Waymo fleets every single day (~2,000 cars), each with eight cameras collecting data that is processed by Tesla’s in-house-designed FSD chip, whether or not the customer purchases the FSD option. In addition, Tesla makes money on every one of these vehicles, with a gross margin currently around 22%, among the best in the auto industry. If Tesla is right that computer vision and AI can eventually solve autonomy, then it is not difficult to make the case that it could easily catch up to and overtake its competitors.

In a series of interviews on Dave Lee’s YouTube channel, AI/machine learning expert James Douma breaks down all of the components of Tesla’s autonomy technology stack in great detail and at one point draws an analogy between Tesla’s AV efforts and the invention of human flight over 100 years ago. Interestingly, when the Wright brothers conquered flight, the world barely noticed. Flight was considered so impossible that people didn’t believe it even when it happened. Given all of these empty promises and failed timelines, could we collectively be experiencing something similar?

Tesla Vision

When Andrej Karpathy, Tesla’s director of AI and Autopilot Vision, started his PhD at Stanford in 2011, computer vision was primitive by today’s standards. When he ran state-of-the-art visual recognition detectors, it wasn’t uncommon to get bizarre outcomes, such as the computer detecting cars in trees with 99% confidence.

“And you would kind of just shrug your shoulders and say, ‘that just happens sometimes…’” Karpathy said to a laughing audience during a presentation at OpenAI in 2016.

When you begin to understand how a computer “sees,” it becomes clear how this might happen.

To a computer, an image is just a matrix of pixels, like a chess board, with each pixel assigned a number that represents its brightness. In a standard 8-bit grayscale image, the number is 0 if the pixel is black and 255 if it is white, with the values in between representing different shades of gray. In a color image, each pixel has three numbers representing the intensities of red, green, and blue. So a one-megapixel color image is a grid of 1,000 × 1,000 pixels, each with a red, green, and blue value.
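To make this concrete, here is how an image looks to a program, sketched with NumPy arrays (the pixel values are made up for illustration). The grayscale conversion uses the ITU-R BT.601 luma weights, which are one common convention among several.

```python
import numpy as np

# An 8-bit grayscale image is a 2-D grid of brightness values: 0 = black, 255 = white.
gray = np.array([[0, 128, 255],
                 [64, 192, 32]], dtype=np.uint8)
print(gray.shape)  # (2, 3): 2 rows by 3 columns

# A color image adds a third axis holding red, green and blue intensities.
height, width = 1000, 1000                 # one megapixel
color = np.zeros((height, width, 3), dtype=np.uint8)
color[0, 0] = [255, 0, 0]                  # top-left pixel set to pure red
print(color.shape, color.size)             # (1000, 1000, 3) 3000000

# Collapse RGB to a single brightness value per pixel with a weighted sum.
weights = np.array([0.299, 0.587, 0.114])  # BT.601 luma weights
luma = (color.astype(np.float64) @ weights).round().astype(np.uint8)
print(luma[0, 0])                          # 76
```

Everything a vision system “knows” has to be inferred from arrays like these: three million numbers per megapixel frame, with no built-in notion of “car” or “lane line.”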

The core challenge of computer vision, then, is going from a grid of pixels and brightness values to high-level concepts like cars, dogs, pedestrians, lane lines, drivable space, etc. This is an enormous challenge even for static images, let alone moving objects and the safety concerns involved in navigating a two-ton vehicle. Given how primitive computer vision still was in 2011, it’s no surprise that the spatial localization and safety provided by Lidar and HD maps were the obvious choice when early work on what became Waymo started in 2009.

However, everything changed in computer vision in 2012 when a neural network trained through deep learning won the ImageNet Challenge, beating the runner-up by over 10 percentage points. ImageNet is a database of over 14 million hand-annotated images designed for visual object recognition research. Although deep learning has been around since at least the 1980s, this decisive win essentially put it on the map for computer vision, and today experts wouldn’t even consider doing any serious image recognition task without deep learning.

Deep learning and neural networks

Computer vision was difficult with older techniques because of the challenge of writing software with instructions for identifying different objects given all the variables – background, lighting, viewpoint, etc. When you consider that an image is just a grid of numbers for a computer, writing a program even just to reliably tell the difference between a dog and a cat is extremely difficult.

Neural networks, also known as artificial neural networks, are a subset of machine learning, which in turn is a type of AI. Deep neural networks help solve computer vision because they can learn by example through a process called deep learning. Using a training process with a large dataset of labeled images, a neural network can learn the difference between a dog and a cat without the need for human-written rules – in effect, largely writing the code itself. The key requirement is a very large and varied dataset. Although a child can learn what a fire hydrant is from just a few examples, a neural network requires thousands of different examples.
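The sketch below shows “learning by example” at toy scale: a neural network with one hidden layer, written from scratch in NumPy, is trained on synthetic labeled points that stand in for “cat” and “dog” feature vectors. It is an illustration only; Tesla’s networks are vastly larger convolutional models trained on real images. The point is that nobody writes a rule separating the two classes. The weights are simply nudged by gradient descent until the network’s outputs match the labels.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic labeled dataset: two clusters of 2-D "feature" vectors.
n = 200
cats = rng.normal(loc=[-2.0, -2.0], scale=1.0, size=(n, 2))
dogs = rng.normal(loc=[2.0, 2.0], scale=1.0, size=(n, 2))
X = np.vstack([cats, dogs])
y = np.concatenate([np.zeros(n), np.ones(n)]).reshape(-1, 1)  # 0 = cat, 1 = dog

# One hidden layer of 8 tanh units, then a sigmoid output unit.
W1 = rng.normal(scale=0.5, size=(2, 8)); b1 = np.zeros((1, 8))
W2 = rng.normal(scale=0.5, size=(8, 1)); b2 = np.zeros((1, 1))


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def forward(X):
    h = np.tanh(X @ W1 + b1)
    return h, sigmoid(h @ W2 + b2)


learning_rate = 0.1
for step in range(1000):
    h, p = forward(X)
    # Gradient of mean binary cross-entropy w.r.t. the output pre-activation.
    dz2 = (p - y) / len(X)
    dW2 = h.T @ dz2; db2 = dz2.sum(axis=0, keepdims=True)
    dh = (dz2 @ W2.T) * (1.0 - h ** 2)          # backpropagate through tanh
    dW1 = X.T @ dh; db1 = dh.sum(axis=0, keepdims=True)
    W1 -= learning_rate * dW1; b1 -= learning_rate * db1
    W2 -= learning_rate * dW2; b2 -= learning_rate * db2

_, p = forward(X)
accuracy = ((p > 0.5) == (y == 1)).mean()
print(f"training accuracy: {accuracy:.2%}")
```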

One of the limiting factors is computing power. In fact, one of the reasons that deep learning aced the ImageNet challenge in 2012 is because the team that won leveraged the growing computing power of GPUs (graphics processing units) over CPUs (central processing units), which greatly improved the processing speeds for its neural networks. Tesla’s FSD chip includes two neural processing units, each capable of peak performance of 37 trillion operations per second (TOPS) while running at only 100 watts. Version two of this FSD chip with improved specifications will likely be ready in a year or two.
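Peak throughput figures are easier to reason about as a per-frame budget. The back-of-the-envelope sketch below uses the figures above (two units at about 37 TOPS each, 100 watts) plus one hypothetical input that is not a Tesla specification: that all eight cameras are processed at 36 frames per second. Real-world utilization is below peak, so treat the result as an upper bound rather than a measured number.

```python
# Figures from the text (peak, not sustained).
NPUS = 2
TOPS_PER_NPU = 37            # trillion operations per second, each
POWER_WATTS = 100

# Hypothetical workload assumption, not a Tesla specification.
CAMERAS = 8
FRAMES_PER_SECOND = 36

peak_ops_per_second = NPUS * TOPS_PER_NPU * 1e12
ops_per_watt = peak_ops_per_second / POWER_WATTS
frames_per_second_total = CAMERAS * FRAMES_PER_SECOND
ops_per_frame = peak_ops_per_second / frames_per_second_total

print(f"{peak_ops_per_second / 1e12:.0f} TOPS peak")              # 74 TOPS peak
print(f"{ops_per_watt / 1e12:.2f} TOPS per watt")                  # 0.74 TOPS per watt
print(f"{ops_per_frame / 1e9:.0f} billion ops per camera frame")  # 257 billion ops per camera frame
```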

Another limiting factor is the human labeling needed for such a massive dataset. Tesla is currently designing and developing a “Dojo” supercomputer to assist in the labeling and training process. One of the bottlenecks Tesla has encountered is the massive time and resources needed to label individual images. The plan is for Tesla’s data team to label video data instead of individual frames; Dojo will then parse these video labels into labels for individual frames, significantly speeding up the labeling and training process.
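Tesla hasn’t published how Dojo will turn video labels into frame labels, so the following is only one simple, hypothetical way the general idea can work. A labeler marks an object’s bounding box on two keyframes, and the boxes for the frames in between are filled in by linear interpolation. The `Box` type and `interpolate_boxes` function are illustrative names, not Tesla APIs.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Box:
    """Axis-aligned bounding box in pixel coordinates."""
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def interpolate_boxes(start: Box, end: Box, start_frame: int, end_frame: int) -> List[Box]:
    """Linearly interpolate one box per frame from start_frame to end_frame, inclusive."""
    if end_frame <= start_frame:
        raise ValueError("end_frame must come after start_frame")
    span = end_frame - start_frame
    boxes = []
    for frame in range(start_frame, end_frame + 1):
        a = (frame - start_frame) / span   # 0.0 at the first keyframe, 1.0 at the last
        boxes.append(Box(
            x_min=start.x_min + a * (end.x_min - start.x_min),
            y_min=start.y_min + a * (end.y_min - start.y_min),
            x_max=start.x_max + a * (end.x_max - start.x_max),
            y_max=start.y_max + a * (end.y_max - start.y_max),
        ))
    return boxes


# Two human labels, ten frames apart, yield eleven per-frame labels.
labels = interpolate_boxes(Box(100, 200, 150, 260), Box(120, 200, 170, 260), 0, 10)
print(len(labels), labels[5])
```

A production system would likely need something more robust than straight-line interpolation, such as object tracking, but the labor-saving idea is the same: two human labels become eleven frame labels.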

There are also other ways to label data where the process is more automated. For example, “imitation learning” involves sourcing trajectories that human drivers take through intersections and training the neural network on those paths. In this case, the data is “labeled” automatically by the driver. Comma.ai – an AV startup – has taken this approach to the extreme and has vowed to eventually achieve Level 5 autonomy using this “end-to-end” deep learning technique in which all of the “labeling” is done by the human drivers and the neural network essentially learns all driving tasks through imitation learning.
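In its simplest form, imitation learning (often called behavior cloning) is ordinary supervised learning in which the “label” for each situation is whatever the human driver did. The sketch below is purely illustrative and is not Comma.ai’s or Tesla’s method. It invents a driver whose steering depends linearly on two hypothetical inputs (distance from the lane center and heading error), records noisy demonstrations, and recovers that driving policy with least squares.

```python
import numpy as np

rng = np.random.default_rng(42)

# Hypothetical recorded driving: each row is (lane_offset_m, heading_error_rad).
n_samples = 1_000
states = np.column_stack([
    rng.uniform(-1.0, 1.0, n_samples),   # metres from lane centre
    rng.uniform(-0.2, 0.2, n_samples),   # heading error in radians
])

# The human's steering policy (unknown to the learner), plus measurement noise.
true_gains = np.array([-0.5, -2.0])
steering = states @ true_gains + rng.normal(0.0, 0.01, n_samples)

# The "labels" come for free: they are the driver's own steering commands.
learned_gains, *_ = np.linalg.lstsq(states, steering, rcond=None)
print(learned_gains)  # close to [-0.5, -2.0]


def policy(lane_offset_m: float, heading_error_rad: float) -> float:
    """Steering command predicted by the cloned policy."""
    return float(np.array([lane_offset_m, heading_error_rad]) @ learned_gains)
```

An end-to-end system replaces the two hand-picked inputs with raw camera frames and the linear fit with a deep neural network, which is where the real difficulty lies.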

Just as Tesla is moving into battery manufacturing to address the biggest constraint on its goal of producing 20 million EVs a year by 2030, it is addressing the constraints on building the truly advanced computer vision system necessary for Level 5 autonomy. Keep an eye out for more details on these strategic moves at Tesla’s AI Day, tentatively scheduled for later this year.

Tesla’s fleet advantage: Large, varied, and real dataset

When you consider the large datasets needed to train neural networks even for the simplest image-recognition tasks combined with the enormous visual variability on our roads, it becomes obvious why most AV companies believe that Lidar and HD maps are necessary.

However, the challenge of this approach is the enormous cost of scaling – how do you break out of the geofenced box given the cost of the fleet, the Lidar sensors, the safety drivers, and the cost and difficulty of maintaining HD maps? Again, Tesla gets paid to add more than three Waymo fleets to the road each day. Waymo’s cars are essentially line-following robots on virtual rails that are confined to a safe space. They will never learn to drive everywhere in any condition (Level 5) if their experience remains so limited. As of April 2020, Teslas on Autopilot had logged 3 billion miles, while Waymo last reported 20 million, more than 99% fewer. Sure, both companies also use simulation, but this can never mirror the real world in all its complexity. Admittedly, Waymo’s 20 million miles are truly autonomous, but what’s important here is the amount and variety of data that Tesla has access to across 150 times more miles.
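The comparisons in this section are simple ratios. The sketch below just checks them using the article’s own figures, including the ~2,000 cars per day and the roughly 600 Waymo vehicles cited earlier; no new data is introduced.

```python
# Figures quoted in the text.
tesla_autopilot_miles = 3_000_000_000      # as of April 2020
waymo_reported_miles = 20_000_000
tesla_cars_added_per_day = 2_000           # approximate
waymo_fleet_size = 600                     # vehicles delivered, approximate

miles_ratio = tesla_autopilot_miles / waymo_reported_miles
percent_fewer = 100 * (1 - waymo_reported_miles / tesla_autopilot_miles)
waymo_fleets_per_day = tesla_cars_added_per_day / waymo_fleet_size

print(f"{miles_ratio:.0f}x more miles")                # -> 150x more miles
print(f"Waymo: {percent_fewer:.1f}% fewer miles")      # -> Waymo: 99.3% fewer miles
print(f"{waymo_fleets_per_day:.1f} Waymo fleets/day")  # -> 3.3 Waymo fleets/day
```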

As Andrej Karpathy said in Tesla’s Autonomy Day presentation, “Lidar is really a shortcut – it sidesteps the fundamental problems, the important problem of vision recognition that is necessary for autonomy. And so it gives a false sense of progress, and is ultimately a crutch. It does give really fast demos though…”

To be clear, Waymo also uses AI and deep learning for its vehicles, but it’s not the foundation of its approach. As AI expert Lex Fridman put it, “For Waymo, deep learning is the icing on the cake; for Tesla, deep learning is the cake.”

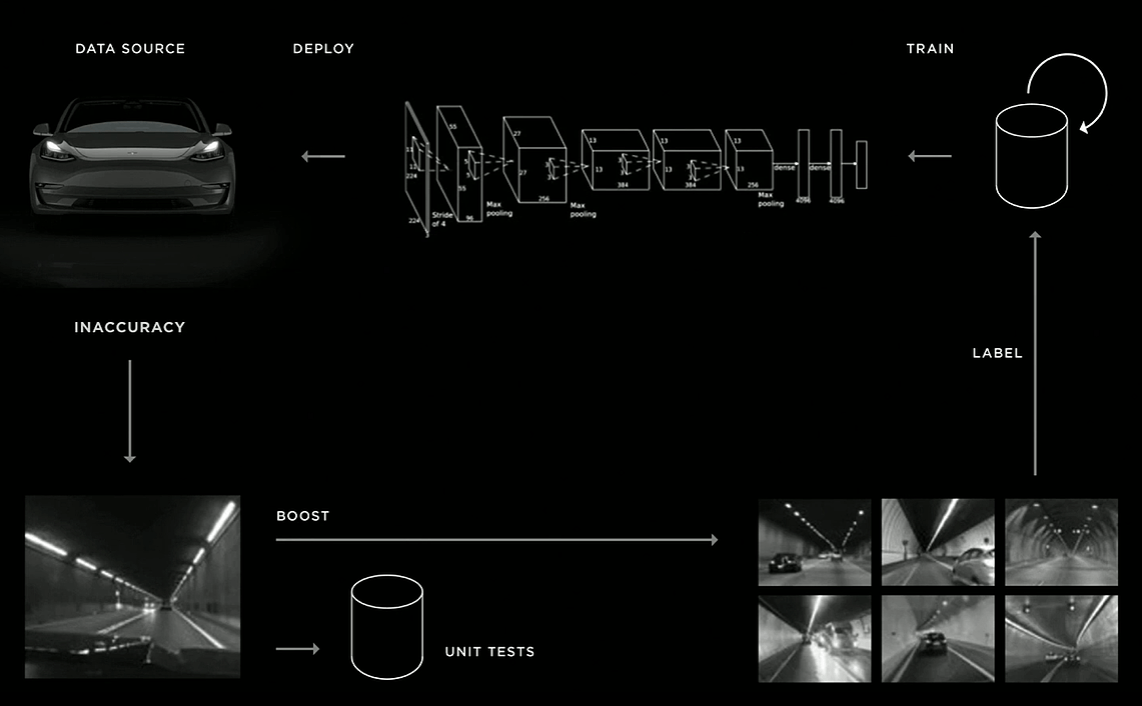

Tesla’s cars are everywhere gathering data, but not all the data coming from its fleet is useful. Tesla has mechanisms to source and curate the most useful data through a trigger infrastructure that flags examples when the neural net is uncertain, or a driver intervenes and takes over. Also, it can gather from the dataset a large collection of images of a particular object that is confusing the neural network – a bike on the back of a car, for example. Then, it can correctly label these images, incorporate them into the dataset, retrain the network, redeploy, and iterate repeatedly – a process it calls the “data engine.”
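Tesla hasn’t published its trigger code, so the following is a hypothetical sketch of the general idea described above. A clip is flagged for human labeling when the network’s prediction is uncertain or when the driver took over. Uncertainty is measured here by the entropy of the network’s class probabilities, which is one common choice among several. The threshold, the `Clip` fields and the `should_upload` function are all assumptions for illustration.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Clip:
    clip_id: str
    class_probs: List[float]   # network's softmax output for the object in view
    driver_intervened: bool    # did the human take over?


def entropy_bits(probs: List[float]) -> float:
    """Shannon entropy in bits: 0 = fully confident, log2(k) = maximally unsure."""
    return -sum(p * math.log2(p) for p in probs if p > 0)


def should_upload(clip: Clip, max_entropy_bits: float = 0.8) -> bool:
    """Hypothetical trigger: send the clip back for labeling if it looks informative."""
    return clip.driver_intervened or entropy_bits(clip.class_probs) > max_entropy_bits


clips = [
    Clip("a", [0.98, 0.01, 0.01], False),  # confident, no takeover: ignore
    Clip("b", [0.40, 0.35, 0.25], False),  # e.g. a bike on the back of a car: uncertain
    Clip("c", [0.97, 0.02, 0.01], True),   # driver intervened
]
print([c.clip_id for c in clips if should_upload(c)])  # ['b', 'c']
```

The flagged clips are the ones worth labeling, adding to the dataset and retraining on, which is what closes the loop of the data engine.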

Just as Google gained an early lead in search through its first-mover advantage and a flywheel of data that helped train its AI (every time you do a Google search, you help train it), the same logic applies to Tesla’s approach to autonomy – if it’s right about computer vision.

A true Level 5 autonomous car will need to handle every situation safely 99.9999% of the time. Attaining those last 9s of reliability is going to be incredibly difficult, but I predict that the first company to do it will be one with an enormous, real world dataset – namely, Tesla.
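Each additional 9 cuts the allowed failure rate tenfold, which is why the last few are so hard to attain. A quick illustration in Python; the “situation” unit is deliberately abstract, since the text doesn’t define how situations would be counted.

```python
from fractions import Fraction


def failures_per_million(nines: int) -> Fraction:
    """Expected failures per million situations at a reliability with 'nines' nines.

    For example, 4 nines = 99.99% reliability = 100 failures per million.
    """
    return Fraction(1_000_000, 10 ** nines)


for nines in range(2, 7):
    reliability_pct = 100 - Fraction(100, 10 ** nines)   # exact, no float rounding
    print(f"{float(reliability_pct):.{nines - 2}f}%: "
          f"{failures_per_million(nines)} failures per million situations")
```

Going from 99% to 99.9999% means going from 10,000 failures per million situations to just one.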

Another reason to think that Tesla is likely to emerge as the winner is that it’s consistently rated among the most attractive employers for engineering students. Where do you think the top data scientists and machine learning engineers want to work? At the company with a hundred times more real-world data than the rest of the industry combined, or at one that confines itself to a geofenced area in the name of safety while 3,700 people die in traffic accidents globally every day?

Pseudo-Lidar

A fair criticism of a camera-only approach is that it doesn’t produce the depth perception and precise real-time 3D maps that Lidar does. This criticism is becoming less valid over time, however, as neural nets can estimate depth with increasing accuracy through a process called “self-supervised learning.” Basically, you feed raw video into the neural network and it teaches itself depth – without any human labeling – by exploiting the same geometric relationships between frames that underpin techniques like Structure from Motion (SfM) and Simultaneous Localization and Mapping (SLAM).
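The “pseudo-lidar” idea is to take a depth map estimated from camera images and convert it into the same kind of 3D point cloud a Lidar produces. That conversion is standard pinhole-camera geometry. Below is a minimal NumPy sketch, assuming a depth map that some network has already predicted and a known camera focal length and principal point. The 3 × 3 depth map and intrinsics are made-up values, and this is not Tesla’s code.

```python
import numpy as np


def depth_to_points(depth: np.ndarray, fx: float, fy: float, cx: float, cy: float) -> np.ndarray:
    """Back-project an (H, W) depth map into an (H*W, 3) point cloud in the camera frame.

    Pinhole model: a pixel (u, v) at depth Z sits at
    X = (u - cx) * Z / fx,  Y = (v - cy) * Z / fy.
    """
    h, w = depth.shape
    u, v = np.meshgrid(np.arange(w), np.arange(h))   # u = column index, v = row index
    z = depth
    x = (u - cx) * z / fx
    y = (v - cy) * z / fy
    return np.stack([x, y, z], axis=-1).reshape(-1, 3)


# Hypothetical 3x3 depth map (metres) and camera intrinsics.
depth = np.full((3, 3), 10.0)
points = depth_to_points(depth, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
print(points[4])  # centre pixel -> [ 0.  0. 10.]
print(points[0])  # top-left pixel -> [-5. -5. 10.]
```

Once depth is turned into points like this, the same downstream 3D detection and localization methods built for Lidar can, in principle, be reused; how accurate the result is depends entirely on the quality of the predicted depth.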

Thus, Lidar’s main advantage of accurate localization/3D mapping is diminishing – and deep learning is accelerating this trend. In addition, Lidar often fails in heavy rain and with very bright objects. It even has difficulty seeing black cars due to low reflectivity or knowing the difference between a plastic bag and a tire. Supposedly, one of the major benefits of Lidar is its ability to see in the dark, but in the real world we use headlights so I’m not sure this is much of an advantage. Not only is Lidar expensive, but it’s also complex and very difficult to mass produce – especially at automotive grade levels. Lastly, there are remaining issues with Lidar that may get worse as more AVs are on the road – interference between Lidars from different vehicles and eye safety issues that are related to the tradeoffs of using near-infrared wavelength Lidar vs. short-wave infrared Lidar.

If cameras and AI can eventually generate depth maps with pseudo-Lidar that are accurate enough for safe autonomy, then Lidar won’t be necessary and Tesla is likely to achieve Level 4 and then Level 5 autonomy way before anyone else.

Of course, there is the chance that once Waymo and/or other AV companies conquer the complex city environments where they are currently testing, they will expand much more rapidly than I anticipate. Will Tesla’s neural networks close this depth perception gap faster than these Lidar/HD maps AV companies break out of these geofenced areas? This is the trillion-dollar question…

Mobileye

One company that has a very good chance of rivaling Tesla in data is Mobileye, which Intel (INTC) acquired in 2017. Over 60 million vehicles are equipped with some form of its ADAS (advanced driver assistance systems). The company has acknowledged that HD maps don’t scale and has developed its own type of map, called “AV maps,” that assists in navigation and perception. I am impressed with Mobileye’s ambition and technology offerings, but because the company lacks the vertical integration and rapid OTA (over-the-air) update cycles that Tesla employs, I think Mobileye will have a hard time catching up to Tesla using its deep learning approach. I believe this is the reason Mobileye has chosen to use Lidar for robotaxis, even though it demonstrated the technological viability of a camera-only system in a video demo last year.

Comma.ai

An approach that I would not count out is the “end-to-end,” imitation deep learning employed by Comma.ai. The founder, George Hotz, was allegedly approached by Musk to rewrite Tesla’s Autopilot software in 2015. After a contract dispute, Hotz bailed and went on a mission to solve autonomy himself and used his unfinished work for Tesla to build the foundation of his company. He now claims that its OpenPilot software is the “Android” to Tesla’s “iOS” because it’s available for 100 different car models and currently has over 2,000 daily active users. Already, this scrappy startup has doubled Waymo’s last reported fleet miles with 40 million logged. I consider it the only true competitor to Tesla’s Autopilot. If Apple acquired Comma.ai, I would definitely need to reconsider my prediction of Tesla achieving Level 5 autonomy first.

Might both approaches be viable paths?

There are many different possible outcomes, but it’s conceivable that these geofenced Level 4 solutions continue to expand slowly for ride-hailing services and Tesla eventually achieves Level 5 autonomy as the first self-driving vehicle available to consumers. I think the market is large enough for both approaches to be successful, and that this is the most likely outcome.

However, there is a chance that the Lidar/HD map approaches are still a decade or more away from achieving scale and Tesla’s data flywheel accelerates its progress to the point where it is clear to the industry (and the stock market) that it is the only viable medium-term solution to Level 5 autonomy. In this scenario, Comma.ai would definitely be an acquisition candidate or Hotz may choose to stay independent and sell access to its data – an option he seems open to.

Deep dive on deep learning

Although this report covers a lot of material, we have barely scratched the surface. To learn more, I highly recommend watching the entire playlist of Dave Lee’s interviews with machine learning expert James Douma, as well as Andrej Karpathy’s many presentations on YouTube.

Conclusion

Tesla has clearly taken a hard path to autonomy, but it’s a scalable one that lends itself to intermediate products along the way. Critics say Musk had no choice but to use only cheap sensors because he needs to sell affordable cars, but what if this approach gets to Level 5 autonomy first? How many lives will be saved? Crash data from Tesla’s Autopilot – which is standard on all of its cars – already show that it is saving lives, and it will only get better over time. Although Autopilot is controversial because its driver monitoring system is not camera-based, I can say with confidence that my own experience with it makes me a safer driver, and Sebastian Thrun, the founder of Google’s self-driving car project (now Waymo), feels the same way about his Tesla.

Tesla’s camera vision approach aligns perfectly with its first-principles DNA in that it is the simplest solution possible – if it works. As Musk has said many times, “the best part is no part” – if we can train computers through deep learning to see and perceive “as plain as day” with 360-degree superhuman vision, then the massive costs of Lidar and HD maps could be unnecessary.

Either way, AVs are guaranteed to be an enormous market – with estimates as high as $7-10 trillion annually. Apple has the largest market capitalization of any company in the world, yet the total global smartphone market is less than $1 trillion. There will be hundreds of billions invested in this sector over the coming years, and many ways to make money. Don’t miss Whitney Tilson’s TaaS (Transportation as a Service) video to learn how to take advantage of this massive trend.

Lastly, if you can gain enough conviction on Tesla’s camera vision approach – as I have – I think there is still substantial upside for the stock, even at a ~$570 billion market cap, because the world – for the reasons I laid out – is doubting Tesla and Musk once again. Stay tuned…