Even for humans, it is difficult to single out one voice in a crowded place, and for computers it has been next to impossible. Not anymore. A new Google AI tech can pick out specific voices by watching people’s faces as they speak.

For this new Google AI tech, the team first taught its neural network model to identify people speaking by themselves. It then created virtual “parties” full of overlapping voices to train the model to separate multiple voices into distinct audio tracks. The results are remarkable.
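To make the idea of a virtual “party” concrete, here is a minimal sketch of how a synthetic training example could be assembled: clean single-speaker tracks are summed into one mixture, and the clean tracks are kept as the targets the model should recover. This is an illustration only, not Google's pipeline. Sine tones stand in for real speech, and the names `tone`, `mix` and `make_training_example`, the sample rate and the noise level are all assumptions made for this example.

```python
import math
import random

SAMPLE_RATE = 8000  # samples per second (assumed value for this toy example)


def tone(freq_hz, seconds, amplitude=0.5):
    """Stand-in for a clean single-speaker recording: a pure sine tone."""
    n = int(SAMPLE_RATE * seconds)
    return [
        amplitude * math.sin(2 * math.pi * freq_hz * t / SAMPLE_RATE)
        for t in range(n)
    ]


def mix(tracks, noise_level=0.0, seed=0):
    """Sum clean tracks sample by sample and add optional uniform noise."""
    rng = random.Random(seed)
    length = min(len(track) for track in tracks)
    return [
        sum(track[i] for track in tracks) + rng.uniform(-noise_level, noise_level)
        for i in range(length)
    ]


def make_training_example(clean_tracks, noise_level=0.05):
    """Return (mixture, targets): what the model hears and what it should output."""
    return mix(clean_tracks, noise_level), clean_tracks


# Two hypothetical "speakers" talking at the same time.
speaker_a = tone(120.0, 0.5)
speaker_b = tone(210.0, 0.5)
mixture, targets = make_training_example([speaker_a, speaker_b])
```

Because the clean tracks are known before mixing, every synthetic mixture comes with a ground-truth answer, which is what makes this kind of data useful for supervised training.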

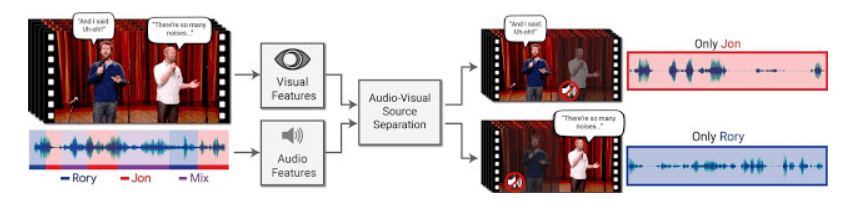

“In this work, we are able to computationally produce videos in which speech of specific people is enhanced while all other sounds are suppressed,” say Google researchers in a blog post.

Google also released a video to demonstrate its research. In the video, the new Google AI tech generates a clean audio track for one person even when two people are talking over each other. The search giant further claims that the tech works even if a person partially hides their face with hand gestures or a microphone.

“Our method works on ordinary videos with a single audio track, and all that is required from the user is to select the face of the person in the video they want to hear, or to have such a person be selected algorithmically based on context,” the search giant says in the blog post.

Separating two voices is comparatively easy when the speakers sound different: the bigger the pitch difference between the two voices, the better the results. But what if the tech is used on two spliced videos of the same speaker? Google answered that with an example video (since removed). The video overlaid two of Sundar’s audio tracks, and although the output had a few irregularities, the result was still impressive, according to Android Police.

Google has detailed its research in a paper titled “Looking to Listen at the Cocktail Party.” The name alludes to how people can focus on a single voice despite plenty of background noise at a cocktail party.

Currently, the search giant is exploring ways to integrate this new Google AI tech into its products. The feature would be a valuable addition to video chat services like Hangouts or Duo. The tech would not only help you listen to the person you want to hear in a crowded room, but would also improve video recordings. On the other hand, Google’s new tech could also raise privacy concerns.

“Our method can also potentially be used as a pre-process for speech recognition and automatic video captioning. Handling overlapping speakers is a known challenge for automatic captioning systems, and separating the audio to the different sources could help in presenting more accurate and easy-to-read captions,” the search giant says.

Google uses machine learning in almost every product, from image search to translation tools. Machine learning helps computers see, listen and speak in much the same way humans do. For instance, an object with four legs and a tail is likely an animal, and if it also has horns, the range of possible animals narrows. Over time, computers get better at recognizing images as they process millions of them.

Similarly, Google uses machine learning in household products such as the Nest. Machine learning helps these products get better at predicting how and when their owners want to use them, such as what room temperature they are comfortable with.

Apart from these applications, the search giant is also using the technology to address several environmental issues. Google’s sustainability lead, Kate E. Brandt, spoke to Forbes about the projects the search giant is a part of. “We’re seeing some really interesting things happen when we bring together the potential of cloud computing, geo-mapping and machine learning,” she said.

One such project aims to protect vulnerable marine life. Google’s machine learning algorithms, with the help of the publicly broadcast Automatic Identification System (AIS) for shipping, are able to locate illegal fishing activity. Computers can accurately “recognize” what a ship is doing by plotting its course and comparing it with the usual patterns of ship movement.
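As a rough illustration of what comparing a ship's course against movement patterns can mean, the sketch below turns a series of Automatic Identification System position reports into two features, average speed and average change of heading, and applies a simple rule. This is not the method Google uses, which the article describes only as machine learning. The `Ping` structure, the function names and both thresholds are hypothetical and chosen purely for the example.

```python
import math
from dataclasses import dataclass


@dataclass
class Ping:
    t_hours: float  # time of the position report, in hours
    lat: float      # latitude in degrees
    lon: float      # longitude in degrees


def distance_km(a, b):
    """Great-circle distance between two pings (haversine formula)."""
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dlon = math.radians(b.lon - a.lon)
    h = math.sin((p2 - p1) / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlon / 2) ** 2
    return 2 * 6371.0 * math.asin(math.sqrt(h))


def bearing_deg(a, b):
    """Initial compass bearing from ping a to ping b, in [0, 360)."""
    p1, p2 = math.radians(a.lat), math.radians(b.lat)
    dlon = math.radians(b.lon - a.lon)
    y = math.sin(dlon) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dlon)
    return math.degrees(math.atan2(y, x)) % 360


def track_features(pings):
    """Mean speed (km/h) and mean absolute heading change (degrees) along a track."""
    legs = list(zip(pings, pings[1:]))
    speeds = [distance_km(a, b) / (b.t_hours - a.t_hours) for a, b in legs]
    headings = [bearing_deg(a, b) for a, b in legs]
    # Wrap heading differences into [-180, 180) before taking the magnitude.
    turns = [abs((h2 - h1 + 180) % 360 - 180) for h1, h2 in zip(headings, headings[1:])]
    mean_turn = sum(turns) / len(turns) if turns else 0.0
    return sum(speeds) / len(speeds), mean_turn


def looks_like_fishing(pings, max_speed_kmh=10.0, min_turn_deg=30.0):
    """Hypothetical rule: slow movement with frequent sharp turns."""
    speed, turn = track_features(pings)
    return speed < max_speed_kmh and turn > min_turn_deg


# A slow zigzag versus a fast straight transit, one ping per hour.
zigzag = [Ping(0, 0.00, 0.00), Ping(1, 0.02, 0.02), Ping(2, 0.00, 0.04),
          Ping(3, 0.02, 0.06), Ping(4, 0.00, 0.08)]
transit = [Ping(0, 0.0, 0.0), Ping(1, 0.2, 0.0), Ping(2, 0.4, 0.0), Ping(3, 0.6, 0.0)]
```

With these made-up tracks, the zigzag is flagged and the straight transit is not. A learned model would replace the fixed thresholds with patterns inferred from many labeled tracks.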

“All 200,000 or so vessels which are on the sea at any one time are pinging out this public notice saying ‘this is where I am, and this is what I am going,’” Brandt said.