With artificial intelligence taking a major leap in recent years and estimates showing it will likely grow even more, we have seen many discoveries which could do more harm than good. One such example is the text-generator developed by OpenAI. The machine learning algorithm can turn only a small portion of text into lengthy and convincing paragraphs. Now MIT has collaborated with IBM’s Watson AI lab to develop a machine learning algorithm to fight AI-generated text like that generated by OpenAI’s algorithm.

Language models are growing in popularity, and many of them are capable of effortlessly processing natural human language. It all started with predicting the next word users were preparing to type, first through auto-correct and then by offering suggestions based on the word the user just typed. For example, the Gmail app can try to predict the next word users want to say while writing an email an email.

Language models have now improved dramatically, leaving plenty of room for manipulation. In other words, people with malicious intents could use text generators to spread propaganda or false information. However, a new forensic machine learning algorithm does an excellent job at recognizing AI-generated text. It’s called GLTR, and it accurately predicts automatically-generated text. It uses the same language model as OpenAI’s text generator, which is GPT-2 117M.

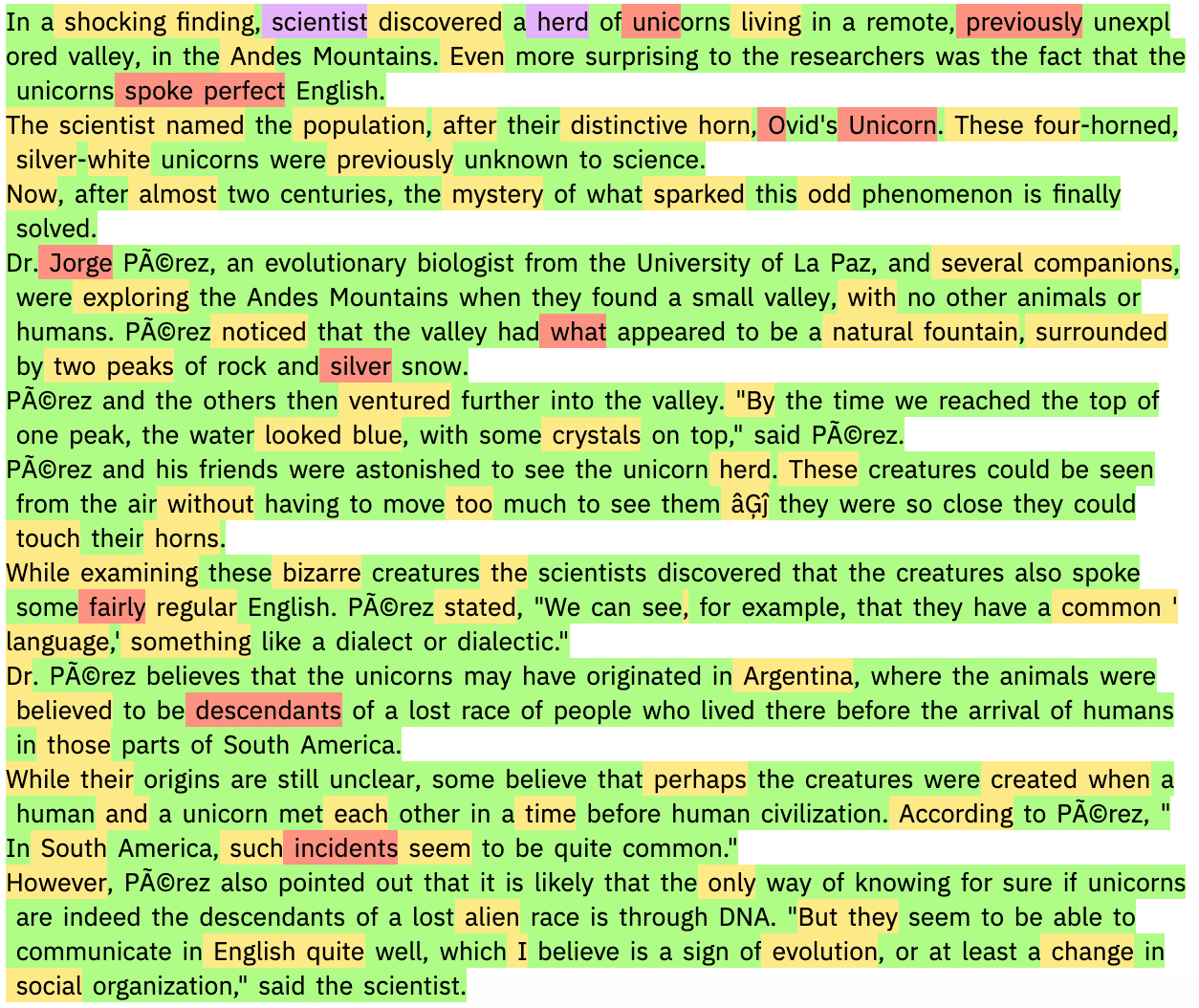

The model ranks words based on which ones are most likely to be used. The top 10 most common words are highlighted in green, while the words which fall into the top 100 category are highlighted in yellow. Red highlights the top 1,000 words, and the rest of the words are highlighted in purple. This method gives researchers visual insight into how likely each word was to be used.

The model also boasts several histograms which process information across the entire provided text. The first histogram determines the number of words listed each category in the text. The second histogram can find connections between each word and the word that follows it to determine the probability ratios for every word following another. The last histogram “shows the distribution over the entropies of the predictions,” according to the press release about the algorithm. If the uncertainty is low, that means that the model confidently made predictions. However, high uncertainty demonstrates that the text is harder to predict and thus more uncertain.

The researchers tested their algorithm on the AI text-generator developed by OpenAI. The first sentence was the prompt used by the model to generate the text, while the rest was generated by OpenAI’s artificial intelligence. At first glance, the text seems realistic, and it’s difficult to determine whether human or machine generated it. However, by running the text through MIT’s algorithm, we can see that most of the words used are those the AI would expect to see (highlighted in green). This suggests a human didn’t write it.

Many improvements are still needed for the GLTR machine learning algorithm. Its main flaw is its limited scale, the researchers said in their press release. More importantly, it requires thorough knowledge of language to determine whether each word is listed as common or uncommon. Researchers hope that despite the model’s imperfections, it can be vastly improved and used to detect AI-generated text and other false test. To learn more, you can check out the live demo here.