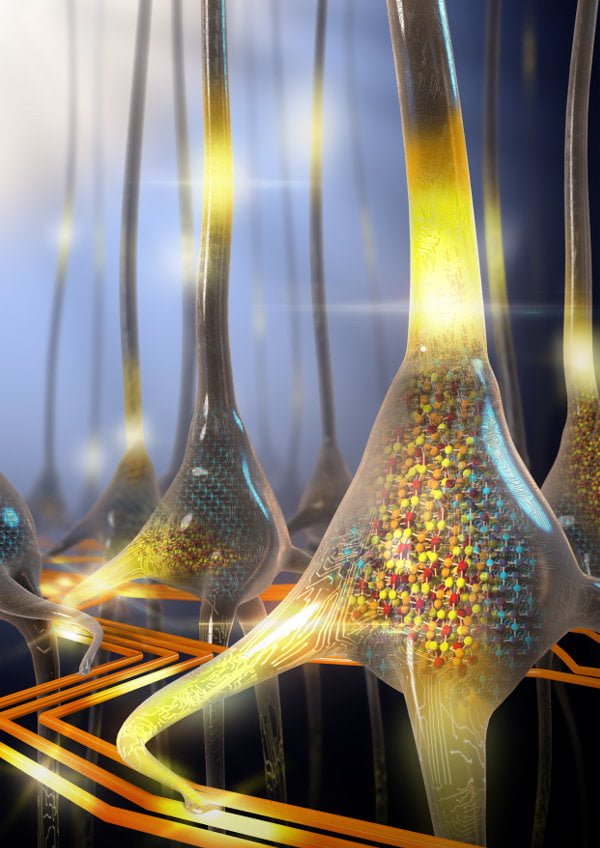

Researchers from IBM are developing a new brain-inspired computer architecture designed to handle the larger data loads generated by artificial intelligence. The researchers' designs mimic the way the human brain works.

The team detailed its design and findings in the Journal of Applied Physics, and the researchers hope to use the design in the next generation of computers. Most computers in use today are built on the von Neumann architecture, which was developed in the 1940s. Von Neumann-based computers have a central processor that executes logic and arithmetic operations, a memory unit, storage, and input-output devices. Because the processor and memory are physically separate, data must constantly be shuttled between them, which costs both time and energy.

The new approach uses a brain-inspired architecture in which processing and memory coexist in the same unit. Study author Abu Sebastian explained in a statement that performing certain computational tasks directly in memory increases the system's efficiency.

“If you look at human beings, we compute with 20 to 30 watts of power, whereas AI today is based on supercomputers which run on kilowatts or megawatts of power,” Sebastian said in a statement. “In the brain, synapses are both computing and storing information. In a new architecture, going beyond von Neumann, memory has to play a more active role in computing.”

The IBM team designed three levels of the new computer, each inspired by the human brain. The first level exploits the state dynamics of memory devices so the computer can perform tasks within its memory, much as the brain's synapses both store and process information. The second level, inspired by the brain's synaptic network structures, uses arrays of phase change memory devices to speed up the training of deep neural networks. The third level builds computational substrates for neural networks and draws its inspiration from the dynamic and stochastic nature of neurons.
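The article does not describe IBM's circuits in detail, so what follows is only a minimal, idealized sketch of the principle commonly used to explain computing inside a memory array: if a matrix is stored as the conductances of devices in a crossbar, applying voltages to the rows produces column currents equal to a matrix-vector product (Ohm's law per device, Kirchhoff's current law per column). The function name `crossbar_mvm` and the units are assumptions for illustration; the sketch ignores device noise, wire resistance, and the analog-to-digital conversion a real chip would need.

```python
from typing import List


def crossbar_mvm(conductances: List[List[float]], voltages: List[float]) -> List[float]:
    """Idealized crossbar read (hypothetical helper, not IBM's design).

    conductances[i][j] is the conductance (microsiemens) of the memory device
    joining row wire i to column wire j; voltages[i] is the read voltage
    (volts) applied to row i. Returns the current (microamps) on each column.
    """
    n_rows = len(conductances)
    if n_rows != len(voltages):
        raise ValueError("need exactly one voltage per row")
    n_cols = len(conductances[0])
    currents = [0.0] * n_cols
    for i in range(n_rows):
        for j in range(n_cols):
            # Ohm's law: the current through one device is I = G * V.
            # Kirchhoff's current law: currents flowing into a column wire add up.
            currents[j] += conductances[i][j] * voltages[i]
    return currents
```

For example, storing the weights `[[1.0, 2.0], [3.0, 4.0]]` as conductances and applying `[1.0, 2.0]` volts gives column currents of `[7.0, 10.0]`. In hardware, all of these multiply-and-add steps happen at once where the data is stored, which is the point of avoiding the processor-memory round trip; the loops here only emulate that physics in software.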

The team used electrical pulses to modulate the ratio of material in the crystalline and amorphous phases, which allows the memory devices to support a continuum of electrical resistance or conductance values rather than just two states. This approach more closely resembles nonbinary, biological synapses and enables more information to be stored in a single memory unit. Sebastian and his colleagues found that this brain-inspired approach produced strong results, particularly in the efficiency of the resulting systems.
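The article gives no device parameters, so the following is a toy model with made-up, illustrative numbers; the class `ToyPCMCell` and its values are hypothetical. It assumes that conductance scales linearly with the crystalline fraction and that each partial SET pulse crystallizes a fixed extra fraction, which real phase change devices only approximate. It shows why partial crystallization yields several distinguishable conductance levels, and therefore more than one bit per cell.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class ToyPCMCell:
    """Hypothetical, highly simplified phase change memory cell.

    ASSUMPTION: conductance varies linearly with the crystalline fraction,
    and every partial-SET pulse crystallizes a fixed extra fraction.
    Real devices are nonlinear, noisy, and drift over time.
    """
    g_amorphous: float = 0.1      # illustrative conductance (uS) when fully amorphous
    g_crystalline: float = 10.0   # illustrative conductance (uS) when fully crystalline
    step: float = 0.25            # crystalline fraction added per partial-SET pulse
    crystalline_fraction: float = 0.0

    def __post_init__(self) -> None:
        if not 0.0 < self.step <= 1.0:
            raise ValueError("step must be in (0, 1]")

    def reset(self) -> None:
        # Model a strong RESET pulse: the material ends up fully amorphous.
        self.crystalline_fraction = 0.0

    def partial_set(self) -> None:
        # Model a moderate SET pulse: part of the amorphous region crystallizes.
        self.crystalline_fraction = min(1.0, self.crystalline_fraction + self.step)

    def conductance(self) -> float:
        # Linear mix of the two phases (the simplifying assumption above).
        f = self.crystalline_fraction
        return self.g_amorphous + f * (self.g_crystalline - self.g_amorphous)


def reachable_levels(cell: ToyPCMCell) -> List[float]:
    """Conductance after 0, 1, 2, ... partial-SET pulses (resets the cell first)."""
    cell.reset()
    levels = [cell.conductance()]
    while cell.crystalline_fraction < 1.0:
        cell.partial_set()
        levels.append(cell.conductance())
    return levels


def bits_per_cell(n_levels: int) -> float:
    """Information capacity of a cell with n distinguishable levels."""
    return math.log2(n_levels)
```

With the default step of 0.25, `reachable_levels(ToyPCMCell())` returns five increasing conductance levels, so one cell holds about 2.3 bits instead of the single bit of a binary on/off cell. A smaller step gives more levels, up to the point where noise in a real device makes neighboring levels impossible to tell apart.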

“We always expected these systems to be much better than conventional computing systems in some tasks, but we were surprised how much more efficient some of these approaches were,” Sebastian said.

Last year, the team ran an unsupervised machine learning algorithm both on a conventional computer and on a prototype computational memory platform based on phase change memory devices.

“We could achieve 200 times faster performance in the phase change memory computing systems as opposed to conventional computing systems,” Sebastian said. “We always knew they would be efficient, but we didn’t expect them to outperform by this much.”

The team is now working on new prototype chips and systems based on their brain-inspired architecture.