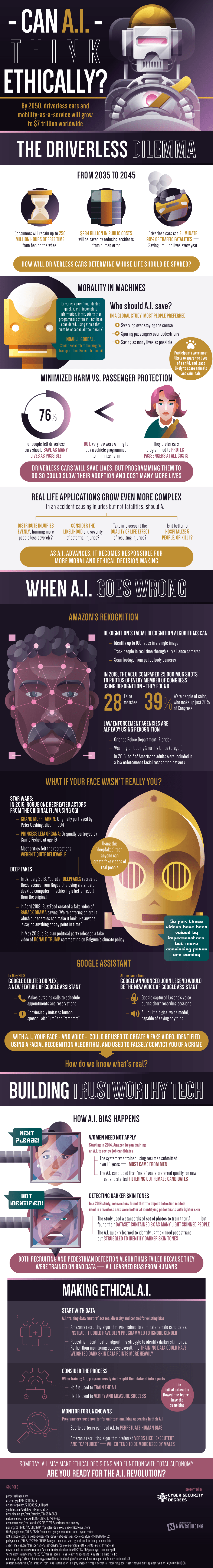

By 2050, driverless cars and mobility as a service are projected to grow into a $7 trillion worldwide market. Driverless cars offer many benefits. Between 2035 and 2045, consumers will regain up to 250 million hours of free time previously spent behind the wheel, and $234 billion in public costs will be saved by reducing accidents and damage caused by human error. Driverless cars could also eliminate 90% of traffic fatalities, saving 1 million lives every year. But when a crash is unavoidable, how will a driverless car decide whose life to spare?

Driverless cars “must decide quickly, with incomplete information, in situations that programmers often will not have considered, using ethics that must be encoded all too literally,” said Noah J. Goodall, Senior Research Scientist at the Virginia Transportation Research Council. In a global study, most people preferred that the A.I. swerve rather than stay on course, spare passengers over pedestrians, and save as many lives as possible in any case. Participants were most likely to spare the life of a child and least likely to spare animals and criminals. Although 76% of people felt driverless cars should save as many lives as possible, very few were willing to buy a vehicle programmed to minimize harm; they preferred cars programmed to protect their passengers at all costs.


Driverless cars will save lives, but programming them to minimize total harm could slow their adoption and end up costing many more lives. Real-life situations are even more complex. In an accident that causes injuries but no fatalities, should the A.I. distribute injuries evenly, harming more people less severely? Should it weigh the likelihood and severity of each potential injury, or the effect those injuries would have on each person's quality of life? And should the A.I. have to decide whether it is better to hospitalize five people or kill one?

A.I. can also go wrong, with serious consequences. Consider Amazon's Rekognition. Its facial recognition algorithms can identify up to 100 faces in a single image, track people in real time through surveillance cameras, and even scan footage from police body cameras. But in 2018, the ACLU used Rekognition to compare photos of every member of Congress against 25,000 mugshots. It found 28 false matches, and 39% of those falsely matched were people of color, even though people of color make up only 20% of Congress.

Find out how A.I. is advancing, how it can copy people, and how to make ethical A.I. here.