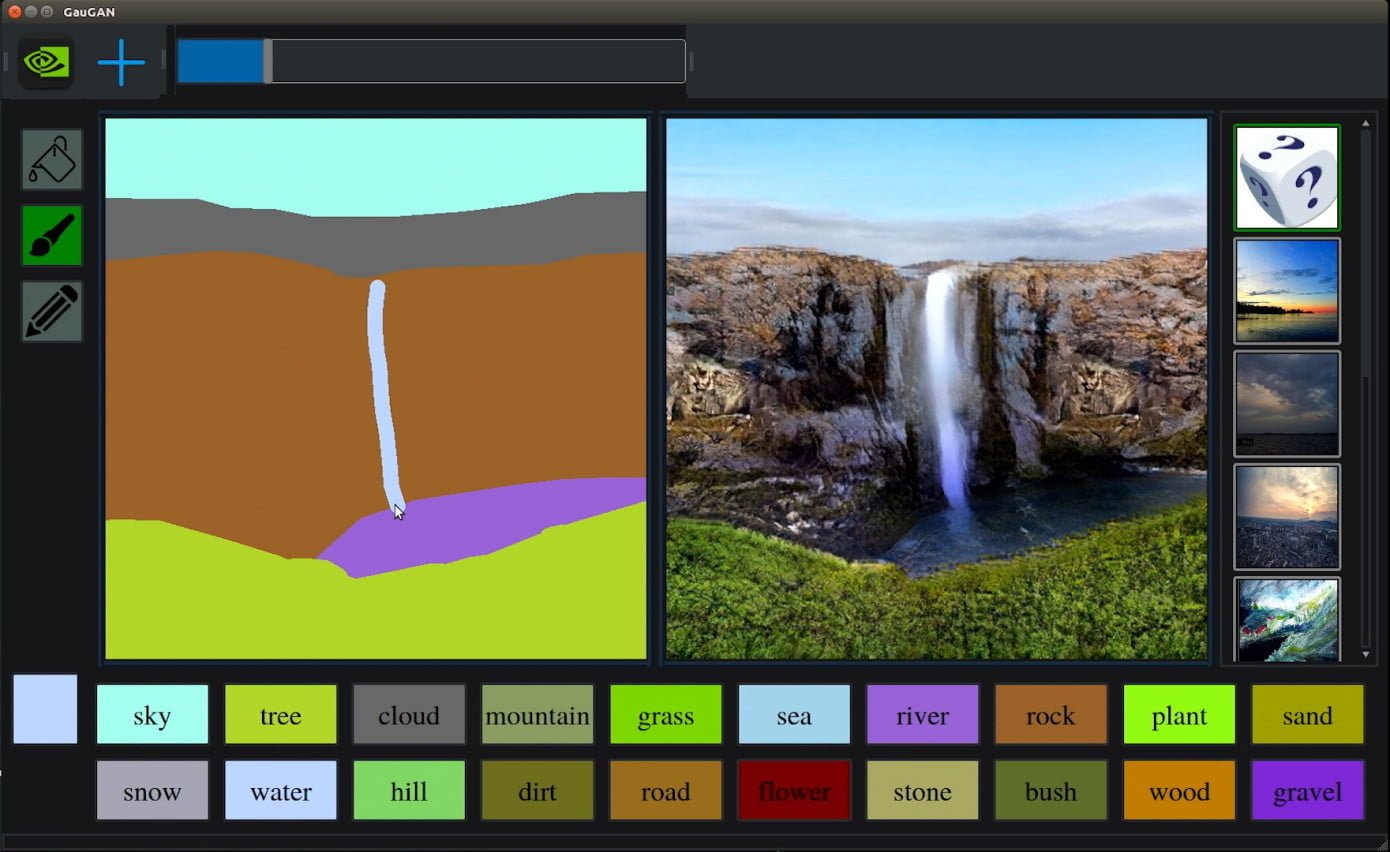

NVIDIA’s AI researchers have developed a deep learning model that transforms doodles into photorealistic landscapes of the kind we usually see on photography-themed Instagram accounts. The results have so much detail and such high texture quality that they can easily be mistaken for actual photographs. The model uses generative adversarial networks (GANs) to convert sketches into images.

The tool built on this model is called GauGAN. It’s a powerful tool for creating lifelike worlds, and artists, architects, game designers and many others can benefit from it. Models like this could make a real contribution to professionals’ future projects and their development.

“It’s much easier to brainstorm designs with simple sketches, and this technology is able to convert sketches into highly realistic images,” Bryan Catanzaro, vice president of applied deep learning research at NVIDIA, said in a statement.

Catanzaro refers to the technology as a “smart paintbrush” that fills in the details inside rough segmentation maps, the high-level outlines that show where the objects in a scene are located. Many of us like to draw and often want to copy the work of some great artist. Unfortunately, the result usually looks more like the first image in the comparison than a masterpiece. NVIDIA’s AI works in the opposite direction, turning sketches into photorealistic landscapes. The model lets users draw their own segmentation maps and choose how the scene should look, and then it finishes the job.

Developing it was far from easy: the model was trained on millions of images before it could fill in a scene with such mesmerizing detail. Based on the images NVIDIA has shown and videos on YouTube, the model can draw ponds, trees, rocks, water, sky and much more. Users can change a segment’s label from “grass” to “snow”, and the entire landscape will change to represent winter.
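To make the idea of a segmentation map concrete, here is a minimal Python sketch of the “grass” to “snow” swap. It only shows the input side: a grid of label ids that a model like GauGAN would take as its starting point. The label names and ids, the tiny grid, and the `relabel` helper are all assumptions made for illustration; they are not GauGAN’s actual label set or API, and no image is generated here.

```python
# Hypothetical label ids, chosen for illustration only.
# GauGAN's real label set is not given in this article.
LABELS = {"sky": 0, "tree": 1, "grass": 2, "water": 3, "snow": 4}


def relabel(seg_map, old, new):
    """Return a copy of seg_map with every `old` label replaced by `new`."""
    old_id, new_id = LABELS[old], LABELS[new]
    return [[new_id if cell == old_id else cell for cell in row]
            for row in seg_map]


# A tiny 4x6 "doodle": sky on top, one tree, grass, and a pond on the right.
seg_map = [
    [0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0],
    [2, 1, 1, 2, 3, 3],
    [2, 2, 2, 2, 3, 3],
]

# Swap the ground label; the tree, sky and water cells are left untouched.
winter_map = relabel(seg_map, "grass", "snow")

for row in winter_map:
    print(row)
```

In this sketch only the map changes. In the real tool, the generator would then be expected to render the whole scene again from the new map, which is why the entire landscape shifts to winter rather than just the relabeled patch.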

“It’s like a coloring book picture that describes where a tree is, where the sun is, where the sky is,” Catanzaro said. “And then the neural network is able to fill in all of the detail and texture, and the reflections, shadows and colors, based on what it has learned about real images.”

GauGAN was trained on real images, so it needed time to learn that real ponds and lakes have reflections before it could generate convincing imitations of them.

“This technology is not just stitching together pieces of other images, or cutting and pasting textures,” Catanzaro said. “It’s actually synthesizing new images, very similar to how an artist would draw something.”

NVIDIA said that even though GauGAN excels at rendering land, sea and sky, the deep learning model can also create more urban features, such as buildings and roads. The research paper behind GauGAN will be presented at the CVPR conference in June and is currently available on the preprint server arXiv.