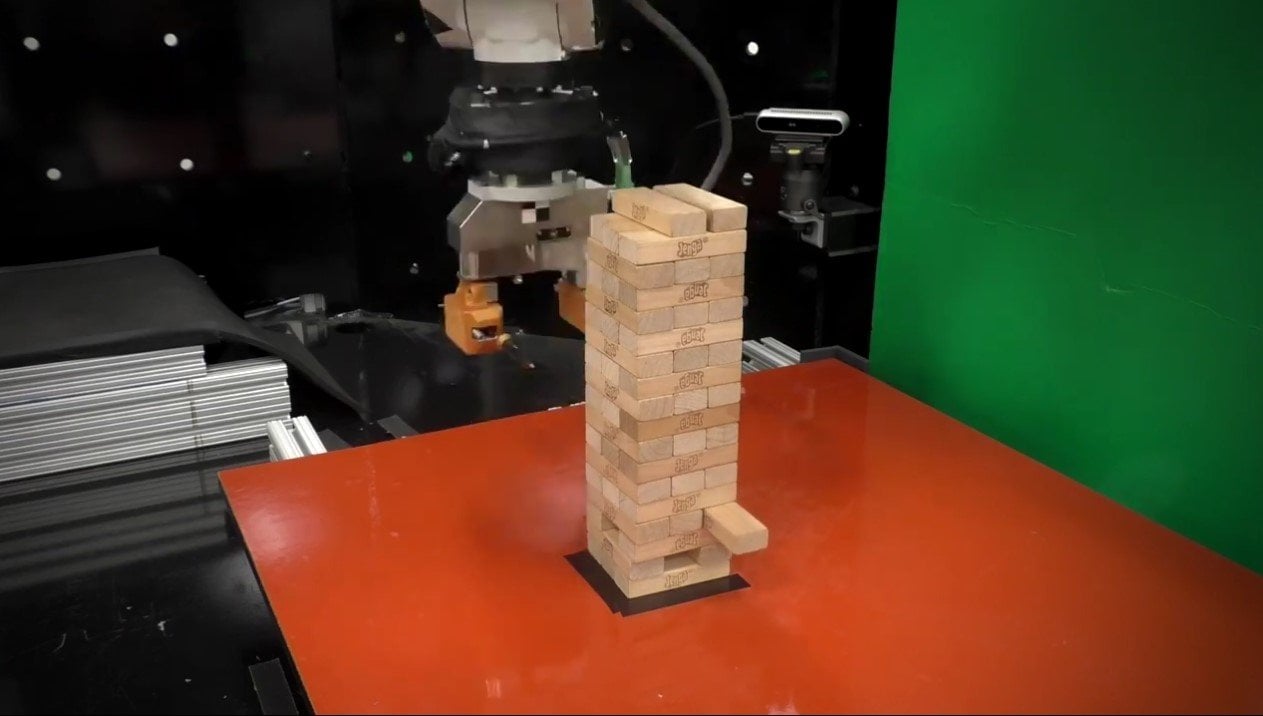

MIT’s new robot uses AI to play Jenga, and so far, it seems to be yielding great results.

Playing Jenga can be time-consuming and difficult. Players must weigh the possibilities and test, poke and feel individual wooden blocks before they are confident enough to pull one out without knocking over the tower. MIT’s robot tackles the same task with a machine learning algorithm.

MIT scientists have successfully taught the robot to play the game using artificial intelligence. The best part is that it won’t get angry if it loses. More importantly, researchers gave the robot only the basic instructions and rules of the game, leaving it free to learn on its own from minimal information. It’s a great advancement in robotics and AI.

The AI and the process were described in a paper published in the journal Science Robotics. The researchers described how the robot thoroughly tests the tower’s stability and analyzes the position of each block. After a successful analysis, it moves on to the careful extraction of a piece. The process may be a bit slow because the robot can only push or pull the piece one millimeter at a time. This gives its force sensors enough time to predict whether the tower is going to collapse if the robot makes a mistake.
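To make the one-millimeter-at-a-time idea concrete, here is a minimal sketch in Python. It is not the researchers’ code. The function name `probe_block`, the force threshold, the 75 mm travel distance and the toy sensor functions are all assumptions made up for illustration. Only the one-millimeter step and the use of force readings come from the description above.

```python
from typing import Callable, Tuple

STEP_MM = 1.0  # from the article: the robot moves a piece one millimeter at a time


def probe_block(read_force: Callable[[float], float],
                travel_mm: float,
                max_force: float) -> Tuple[bool, float]:
    """Push a block in 1 mm steps, stopping early if resistance gets too high.

    read_force is a hypothetical stand-in for a force sensor: it takes the
    distance pushed so far and returns the resistance felt at that point.
    Returns (extracted, distance_pushed_mm).
    """
    pushed = 0.0
    while pushed < travel_mm:
        pushed += STEP_MM
        force = read_force(pushed)  # check the sensor after every small step
        if force > max_force:
            # Too much resistance: assume the block is load-bearing and stop.
            return False, pushed
    return True, pushed


# Toy sensors (assumed values): a loose block barely resists,
# while a stuck block resists more the further it is pushed.
def loose(distance_mm: float) -> float:
    return 0.2


def stuck(distance_mm: float) -> float:
    return 0.2 + 0.5 * distance_mm


print(probe_block(loose, travel_mm=75.0, max_force=1.0))
print(probe_block(stuck, travel_mm=75.0, max_force=1.0))
```

In this toy version the loose block slides all the way out, while the stuck block is abandoned after two millimeters, once the simulated resistance passes the threshold. The real robot learns which readings signal trouble. Here that judgment is reduced to a single fixed number.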

The best part of this approach is that MIT’s robot can learn from its own mistakes while it plays Jenga, thanks to its machine learning algorithm. The study’s senior author, Alberto Rodriguez, told Popular Science that their robot corrects its behavior by “building nuggets of experience.” He also said their robot has already learned what a successful move is.

MIT’s robot plays Jenga much as humans do, by coming up with a good strategy while predicting the outcome. At all costs, the collapse of the tower must be avoided. Although robots are getting closer and closer to being equal with humans in some ways, there is no reason to worry. Rodriguez told Popular Science that the contraption is “good enough so that it could play against a human.” However, an expert player could probably beat it.

“This is definitely not a project that was driven by trying to achieve superhuman performance,” he added.

This is not the first attempt to make AI a match for humans at various games. Google’s DeepMind AI recently won almost all the StarCraft II matches it played against two professional gamers. This just shows the true power of AI and how fast it has been advancing toward the level of humans.